Transforming Reading Into Active Study with AI Questions

Designing an AI-powered study experience inside an eTextbook.

Awards

2023 CODiE Award for “Best Use of Artificial Intelligence in Ed Tech”

2024 Learning Impact Gold Award

2025 Global Tech Award

Role on the team: Facilitated cross-product alignment and drove the experience strategy through design thinking, information architecture, and iterative design.

Deliverables: MVP prototypes for validation and delivery; thought leadership through principles, frameworks, and decision-making guidance; dev specs and handoff documentation.

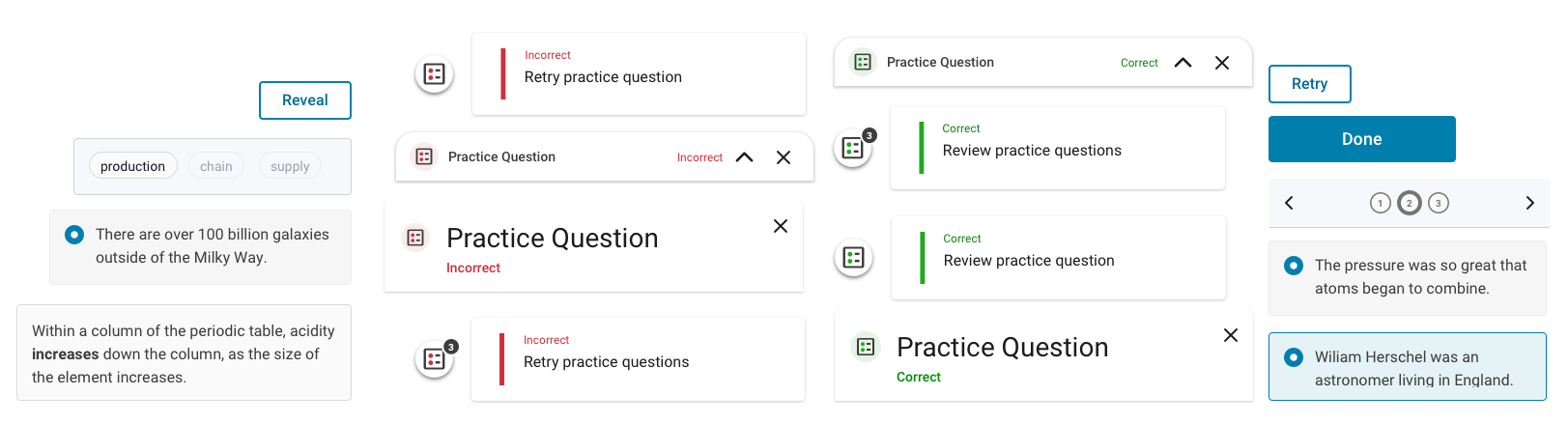

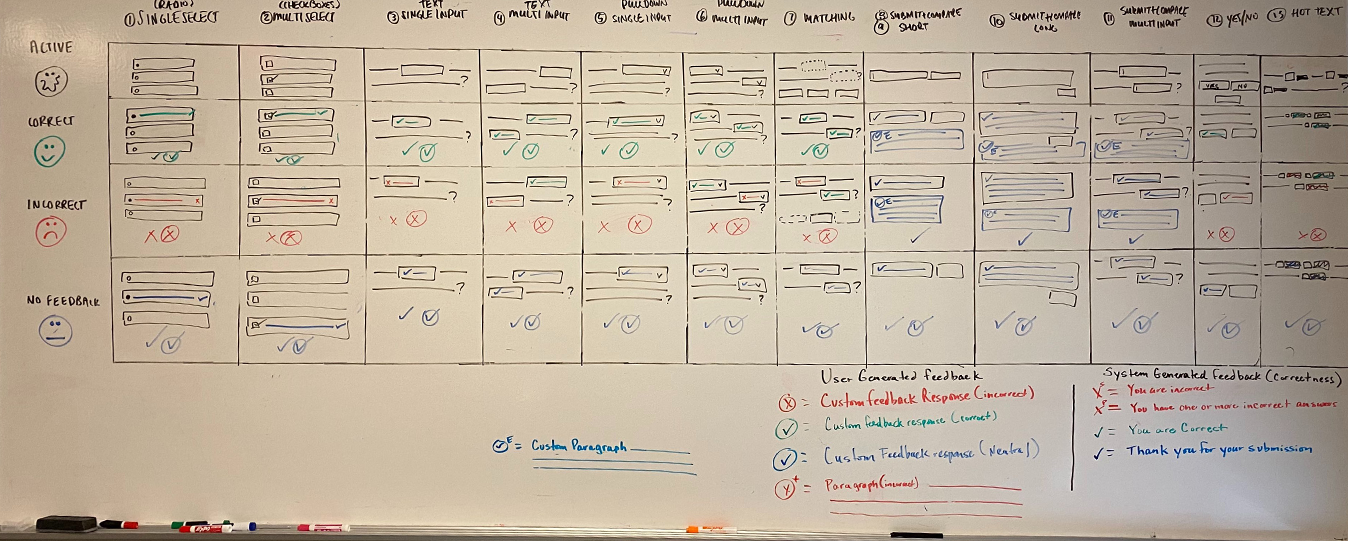

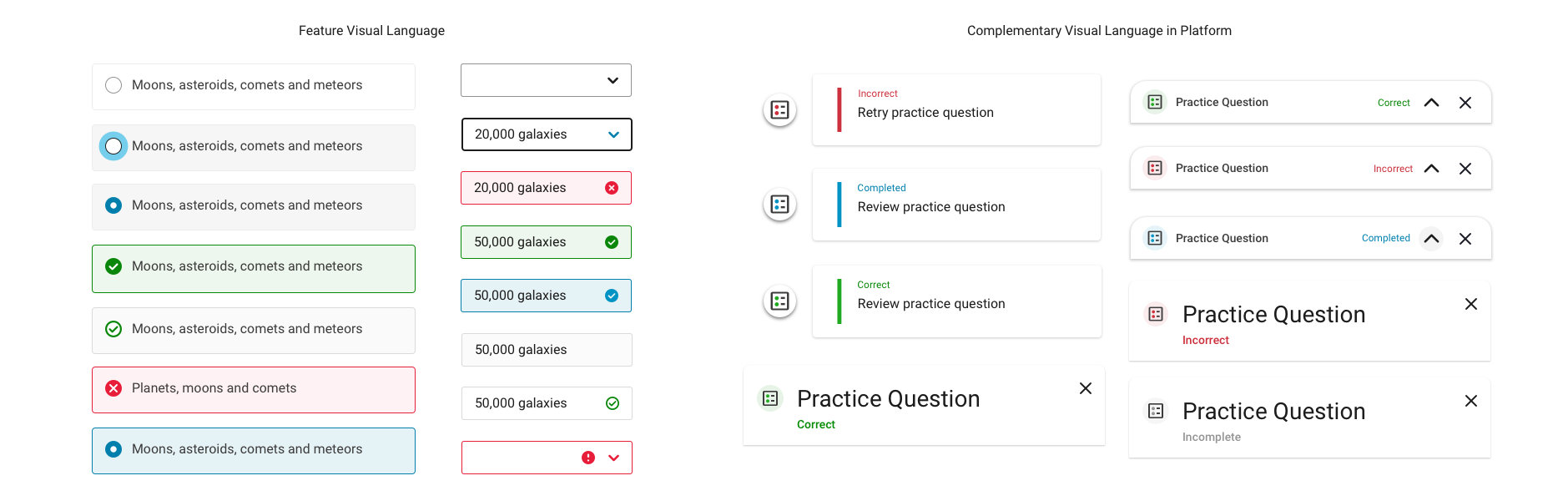

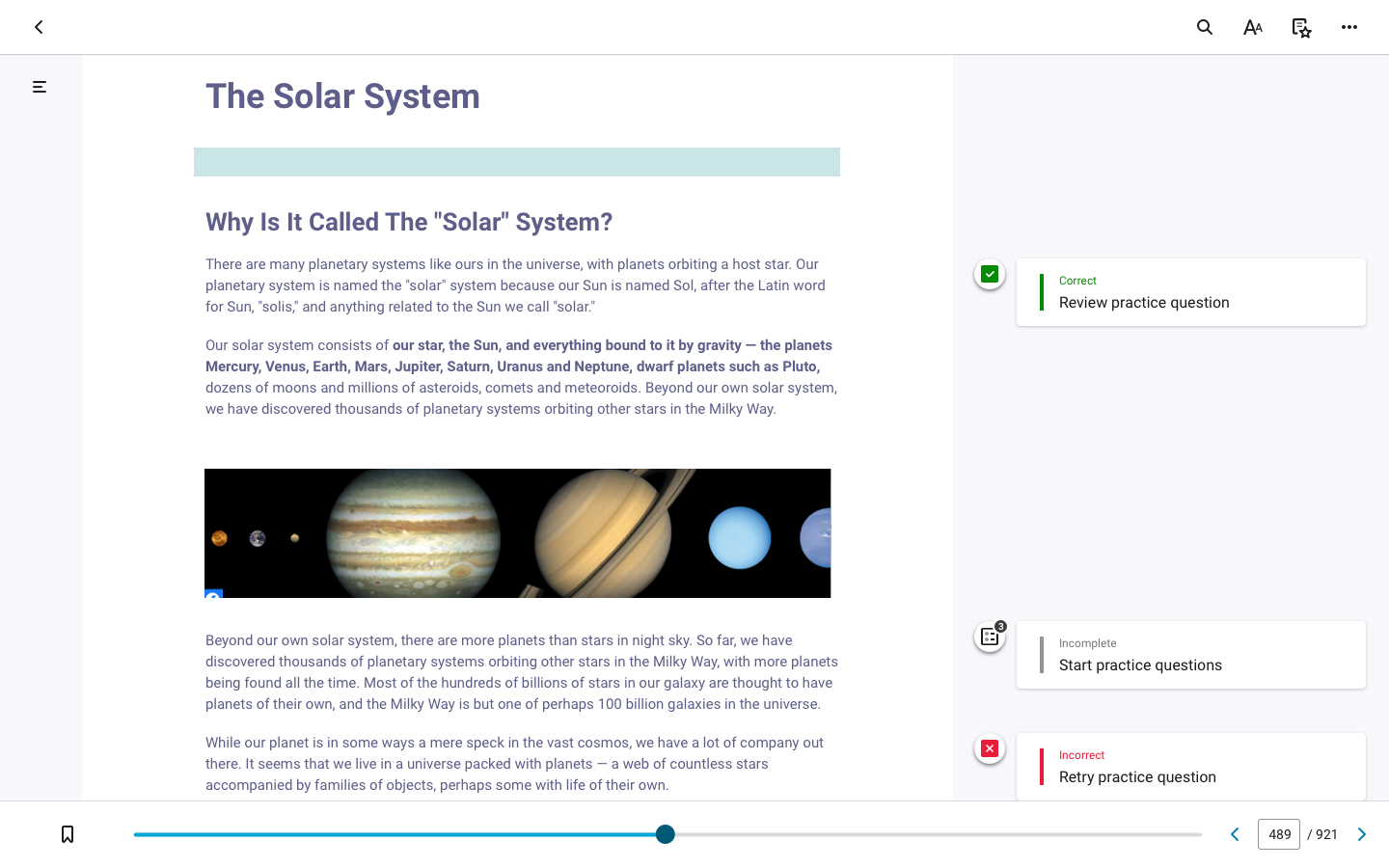

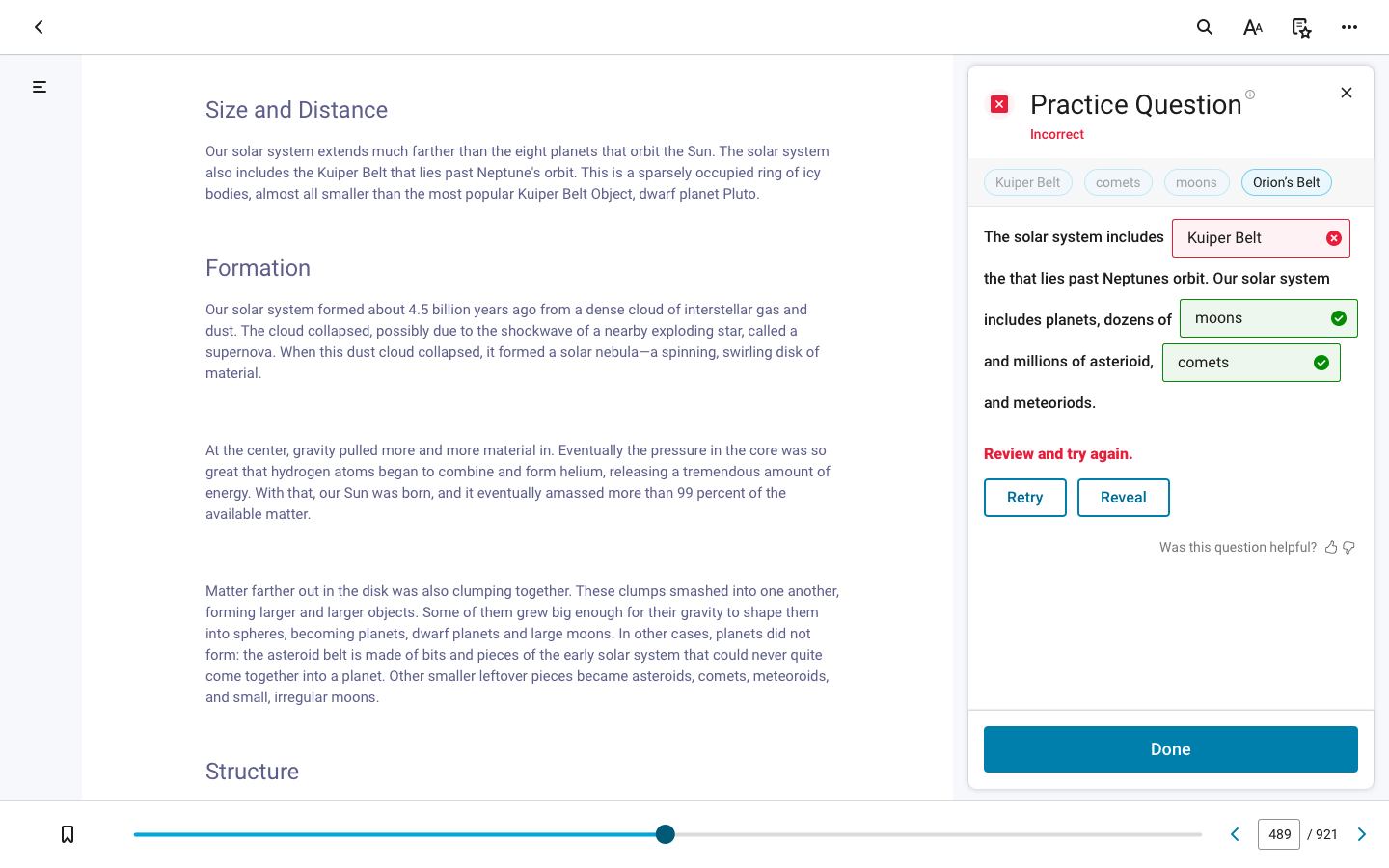

Unified Feedback Across AI-Generated Question Types

The initial challenge was creating a unified experience across 15 distinct question types, each with different functionality and multiple states of correctness. From a design perspective, that meant defining a consistent visual feedback system that could support a range of interactions without overwhelming the learner.

We applied the same status-driven visual language across both question feedback and the supporting feature components integrated throughout the platform. This approach reinforced consistency, lowered cognitive load, and helped students quickly understand and navigate varied question formats with confidence.
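One way to picture a status-driven visual language is as a single lookup that every question type and supporting component reads from. The sketch below is illustrative only: the status names (including `partiallyCorrect`), icon names, color tokens, and labels are all hypothetical and are not taken from the shipped product.

```typescript
// Hypothetical status model. The source mentions "multiple states of
// correctness" but does not list them; these four are assumptions.
type FeedbackStatus = "unanswered" | "correct" | "partiallyCorrect" | "incorrect";

// Visual treatment shared by every question type and supporting component.
interface StatusStyle {
  icon: string;       // hypothetical icon name
  colorToken: string; // hypothetical design-token name
  label: string;      // text shown to the learner and to screen readers
}

// A single source of truth: any component renders feedback by looking up
// the status here instead of defining its own colors or icons.
const STATUS_STYLES: Record<FeedbackStatus, StatusStyle> = {
  unanswered: { icon: "circle-outline", colorToken: "status-neutral", label: "Not answered" },
  correct: { icon: "check", colorToken: "status-success", label: "Correct" },
  partiallyCorrect: { icon: "half-check", colorToken: "status-warning", label: "Partially correct" },
  incorrect: { icon: "cross", colorToken: "status-error", label: "Incorrect" },
};

// Returns the shared visual treatment for a given status.
function styleFor(status: FeedbackStatus): StatusStyle {
  return STATUS_STYLES[status];
}
```

Because the map is keyed by status rather than by question type, adding a new question type in this sketch would not require any new feedback styling.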

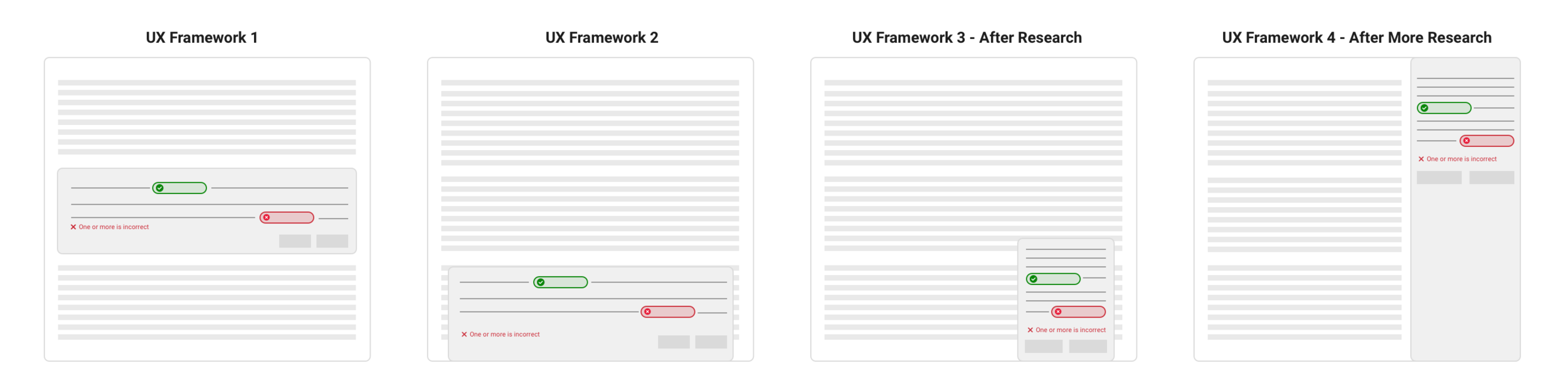

Prototyping Functional Solutions

Together, the explorations below helped us evaluate where questions should live (gutter, drawer, or chat), how much they should interrupt the learner, and which mechanisms best support both discovery and long-term progress tracking.

In-Context Alerts + Full-Width Drawer (Tested with users)

Users are notified when a question appears via either a full-width nudge at the bottom of the screen or a card in the gutter (when expanded). Selecting either entry point opens the question in a full-width drawer.

Usability goals + assumptions:

Strategically placed alerts will increase engagement while the spacious drawer preserves reading context and supports all question types without crowding the gutter.

Conversational Assistant (Chatbot-Led Experience)

Students can answer questions through an integrated chatbot that becomes active when a new question appears. The bot “lights up” as a prompt to engage, and students can scroll through chat history to review previous questions and track progress.

Usability goals + assumptions:

A conversational interface offers a more interactive study experience, encourages self-directed engagement, and creates clear value beyond a standard eTextbook and tutor model, positioning the feature as a differentiated learning experience.

Progress Tracking in the Scrubber + Inline Gutter Questions

Students track progress using icons in the scrubber, showing correct, incorrect, and unanswered questions across the full book like a timeline. Questions appear inline in the gutter next to relevant content. If the gutter isn’t open when a student reaches an unanswered question, the icon animates or nudges to draw attention.
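The scrubber timeline and the nudge rule described above can be sketched as a small data model. This is a minimal illustration under stated assumptions: the `QuestionMarker` shape, the normalized `position` field, and the function names are hypothetical and do not reflect the production implementation.

```typescript
// The three statuses named in the concept description.
type QuestionStatus = "correct" | "incorrect" | "unanswered";

// Hypothetical marker shape: one icon on the scrubber per question.
interface QuestionMarker {
  id: string;
  position: number; // assumed: normalized location in the book, 0 (start) to 1 (end)
  status: QuestionStatus;
}

// Counts markers by status, e.g. to summarize progress across the full book.
function summarize(markers: QuestionMarker[]): Record<QuestionStatus, number> {
  const counts: Record<QuestionStatus, number> = { correct: 0, incorrect: 0, unanswered: 0 };
  for (const marker of markers) {
    counts[marker.status] += 1;
  }
  return counts;
}

// Nudge only when the gutter is closed and the reader has reached
// (or passed) a question that is still unanswered.
function shouldNudge(marker: QuestionMarker, readerPosition: number, gutterOpen: boolean): boolean {
  return !gutterOpen && marker.status === "unanswered" && readerPosition >= marker.position;
}

// Example data (fabricated purely for illustration).
const exampleMarkers: QuestionMarker[] = [
  { id: "q1", position: 0.1, status: "correct" },
  { id: "q2", position: 0.25, status: "incorrect" },
  { id: "q3", position: 0.4, status: "unanswered" },
];
```

In this sketch, `shouldNudge(exampleMarkers[2], 0.4, false)` is true because the reader has reached an unanswered question with the gutter closed, while the same call with the gutter open returns false.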

Usability goals + assumptions:

Inline questions reinforce comprehension by connecting prompts directly to the content, while subtle animations and timeline indicators drive engagement and signal a richer, more interactive reading experience.

TOC-Based Discovery + Status Indicators

Question status indicators appear directly within each Table of Contents section. When a question is available, the bottom bar expands and opens the question in a full-width drawer, allowing learners to jump directly to questions from the TOC.

Usability goals + assumptions:

Since the TOC is one of the most-used reader features, enhancing it creates immediate value and saves time. Status indicators support faster navigation and increase engagement through persistent visibility of available questions.

Persistent Timeline and In-View Questions

Learners see correct, incorrect, and unanswered questions in the scrubber as a timeline for the entire book. Questions live directly beside relevant content in the gutter, with nudges to prompt engagement if students scroll past without interacting.

Usability goals + assumptions:

Keeping questions in-view supports a natural study rhythm. Nudges act as a lightweight prompt to engage without interrupting reading flow.

Iteration Informed by User Testing

We conducted three rounds of usability research to guide how AI-generated questions should appear within the eTextbook experience. Across 24 participants and three distinct UX scenarios, findings consistently pointed in one direction: questions needed to live alongside the content so students could read and respond without losing context.

Key insight: Keeping questions in context reduced navigation friction and helped students maintain comprehension while engaging with the feature.

Feature Release and Student Impact

Within the first year of rollout, the feature generated over 2 million unique study questions and was used by hundreds of thousands of students. Powered by AI, it identifies key learning content, generates practice questions directly from the text, and surfaces them alongside the eTextbook in the reader, turning passive reading into an active study experience. Early feedback reinforced the value of the experience: 95.9% of students who used assigned practice reported the questions were helpful for learning and assessment preparation.